The Key to Successful DeepSeek China AI

Author: Velda Reitz | Posted: 25-03-05 12:25

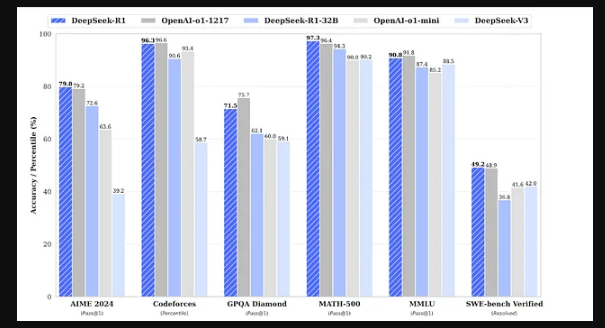

DeepSeek’s rapid adoption and its performance against competitors such as OpenAI and Google sent shockwaves through the tech industry. "DeepSeek is pretty much the first major chatbot from outside the American Big Tech sector …" Being able to generate leading-edge large language models (LLMs) with limited computing resources could mean that AI companies might not need to buy or rent as much high-cost compute in the future. Its release could further galvanize Chinese authorities and companies, dozens of which say they have begun integrating DeepSeek models into their products. Chinese military analysts highlight DeepSeek’s ability to improve intelligent decision-making in combat situations, optimize weapons systems, and enhance real-time battlefield analysis. The United States’s ability to maintain an AI edge will depend on an equally comprehensive strategy: one that establishes a durable policy framework to align private-sector innovation with national strategic priorities.

He added that DeepSeek has positioned itself as an open-source AI model, meaning developers and researchers can access and modify its algorithms, fostering innovation and expanding its applications beyond what proprietary models like ChatGPT allow. As remote work becomes more common, many developers like myself are now starting to travel more. DeepSeek had planned to release R2 in early May but now wants it out as early as possible, two of them said, without providing specifics. Research and analysis AI: both models offer summarization and insights, while DeepSeek promises greater factual consistency. While chatbots including OpenAI’s ChatGPT are not yet powerful enough to directly produce full quant strategies, firms such as Longqi have also been using them to speed up research. The latest to join the growing list is the US, where the states of Texas, New York, and Virginia have prohibited government employees from downloading and using DeepSeek on state-owned devices and networks. South Korea, Australia, and Taiwan have also barred government officials from using DeepSeek due to security risks. The U.S. government should prioritize effective policy actions, including permitting reforms to lower barriers to data center growth, updating the aging U.S. …

R2 is likely to worry the U.S. … AI’s energy demands, and expanding H-1B visa programs to keep the U.S. … Rivals are still digesting the implications of R1, which was built with less-powerful Nvidia chips but is competitive with models developed in the U.S. at a cost of hundreds of billions of dollars. While leading AI firms use over 16,000 high-performance chips to develop their models, DeepSeek reportedly used just 2,000 older-generation chips and operated on a budget of less than $6 million. While the United States should not mimic China’s state-backed funding model, it also cannot leave AI’s future to the market alone. Republican Senator Josh Hawley has filed a bill "to prohibit United States persons from advancing artificial intelligence capabilities within the People's Republic of China". The United States won’t secure its AI dominance with cutting-edge models alone. Despite the bans, experts argue that DeepSeek poses a significant challenge to the US’s longstanding dominance in the AI sector. However, what sets DeepSeek apart is its use of the Mixture of Experts (MoE) architecture, which allows the AI model "to consult many experts from numerous disciplines and domains" within its framework to generate a response. DeepSeek’s models use a mixture-of-experts architecture, activating only a small fraction of their parameters for any given task.
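The mixture-of-experts idea described above is commonly implemented as top-k gating: a small router scores every expert, but only the k highest-scoring experts are actually run, and their outputs are blended with softmax weights. The sketch below illustrates that general technique in plain Python. The function names, the linear router, and the toy "scaling" experts are hypothetical stand-ins; this is not DeepSeek's implementation, which the article does not describe.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
# An expert maps an input vector to an output vector of the same length.
Expert = Callable[[Sequence[float]], Vector]


def softmax(xs: Sequence[float]) -> Vector:
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_scaling_expert(scale: float) -> Expert:
    # Hypothetical toy expert: multiplies every input feature by `scale`.
    def expert(x: Sequence[float]) -> Vector:
        return [scale * v for v in x]
    return expert


def moe_forward(
    x: Sequence[float],
    gate_weights: Sequence[Sequence[float]],
    experts: Sequence[Expert],
    k: int = 2,
) -> Tuple[Vector, List[int]]:
    # Router: one linear score per expert (dot product of its gate row with x).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize the selected scores into mixing weights that sum to 1.
    mix = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for weight, i in zip(mix, top):
        y = experts[i](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, top


if __name__ == "__main__":
    experts = [make_scaling_expert(float(s)) for s in range(1, 5)]
    gate = [[0.1, 0.0], [2.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]
    output, chosen = moe_forward([1.0, 0.0], gate, experts, k=2)
    print("experts used:", chosen, "output:", output)
```

With four experts and k=2, only half of the expert functions run for this input; in large MoE models the ratio of active to total experts is typically much smaller, which is what keeps per-query compute low.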

DeepSeek claims to have built its chatbot with a fraction of the budget and resources usually required to train comparable models. As reasoning models shift the focus to inference (the process in which a finished AI model handles a user's query), speed and cost matter more. On RepoBench, designed for evaluating long-range repository-level Python code completion, Codestral outperformed all three models with an accuracy score of 34%. Similarly, on HumanEval, which evaluates Python code generation, and CruxEval, which tests Python output prediction, the model beat the competition with scores of 81.1% and 51.3%, respectively. For instance, France’s Mistral AI has raised over €1 billion (A$1.6 billion) to date to build large language models. ChatGPT developer OpenAI reportedly spent somewhere between US$100 million and US$1 billion on the development of a very recent version of its product called o1. The Financial Times reported that DeepSeek was cheaper than its peers, at a price of 2 RMB per million output tokens. DeepSeek is cheaper than comparable US models. But "the upshot is that the AI models of the future won't require as many high-end Nvidia chips as investors have been counting on" or the huge data centers companies have been promising, The Wall Street Journal said.
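The Financial Times figure of 2 RMB per million output tokens turns per-request cost into a simple proportion. The sketch below assumes that flat rate applies and ignores input-token charges or any other fees; the constant and function names are hypothetical, and actual pricing may differ.

```python
# Rate reported by the Financial Times; assumed flat for this sketch.
PRICE_RMB_PER_MILLION_OUTPUT = 2.0


def output_cost_rmb(
    output_tokens: int,
    price_per_million: float = PRICE_RMB_PER_MILLION_OUTPUT,
) -> float:
    """Cost in RMB for `output_tokens` at a flat per-million-token rate."""
    if output_tokens < 0:
        raise ValueError("output_tokens must be non-negative")
    return output_tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    # Half a million output tokens at the reported rate.
    print(output_cost_rmb(500_000))
```

Under this assumption, a workload producing 500,000 output tokens would cost 1 RMB, and cost scales linearly with output length.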
